Stop Researching. Start Deciding.

Why AI makes you slower at decisions (and how to fix it)

Earlier this year, I started working with Sarah, a VP of Product at a Series C SaaS company, in both advisory and coaching capacities. In our first advisory session with her leadership team, we reviewed their strategic initiatives. In our first one-on-one coaching session the next week, Sarah showed me something that had her worried.

Her Notion workspace had 47 research spikes documented over six months. Each one meticulously captured AI conversation transcripts, key findings, and useful links.

“I’ve gotten so good at gathering insights,” she told me. “I can research any topic in 15 minutes now. But I’m not making decisions any faster.”

She pulled up a spike from four months earlier about AI coding tools and development velocity. Comprehensive notes. Thoughtful analysis. I was impressed. Then she mentioned that the topic had been added to the agenda for next week's executive team meeting, and she didn't feel prepared.

“I knew I’d done this before,” she said, scrolling through her notes, “but I couldn’t remember what I’d concluded. Just what I’d learned. So I updated my notes with the latest information to prepare for the meeting.”

That’s when I realized: Sarah had optimized for exploration, not conviction.

The Wake-Up Call

Two weeks later, during our advisory check-in with the executive team, Sarah's CEO shared a competitor's product announcement. The competitor had already shipped three features that Sarah's team was planning for Q3 and Q4.

After the meeting, the CEO pulled Sarah aside: “They’re using AI to build faster. Why aren’t we? What’s our strategy here?”

In our coaching session that afternoon, Sarah was visibly frustrated. She pulled up her research from four months ago and walked me through it:

Research Topic: “How should we think about AI-generated code in our development workflow?”

Key Findings:

GitHub Copilot can increase developer productivity 30-50%

Cursor and other AI coding tools accelerating adoption

Some companies shipping features in days instead of weeks

Quality concerns around AI-generated code

Security/licensing questions still evolving

Testing becomes even more critical

Conclusion: “Worth revisiting as tools mature and best practices emerge”

The notes told her what AI coding tools could do. They didn’t tell her what her company should do about it.

“I have all this research,” Sarah said, “but when the CEO asks ‘what should we do?’ I don’t have an answer.”

The Real Problem

In our next coaching session, we dug into why this kept happening.

Sarah’s research spikes were thorough. But they were optimized for learning, not deciding.

When she documented her exploration, she captured:

What the technology could do

What other companies were doing

What the risks and trade-offs were

What integrating the technology entailed

Typical costs and timelines

What she didn’t capture:

What she believed

Why she believed it

What would change her mind

Without decision architecture, every spike was a one-time knowledge exploration. When the topic resurfaced months later, the research had to start over because there was no scaffold to build on.

“You’re creating a library of things you’ve learned,” I told her, “not a framework for what you believe and what needs to be true to act.”

The Complexity She Was Actually Facing

As we prepared for the next executive advisory session, Sarah and I mapped out the real tensions in the AI coding tools decision. This wasn’t simple.

Engineering’s Perspective: “We want to use these tools—they’re genuinely powerful. But we need guard rails. Who’s responsible when AI-generated code has a security vulnerability? What happens to our code review standards?”

Sarah’s Product Perspective: “This isn’t just about velocity. If we can generate code faster, we have a choice: ship more features, or finally address the technical debt that’s been slowing us down for two years. Both matter. We can’t do both. Which creates more value?”

Quality/Engineering Lead’s Concern: “Our test coverage is already struggling to keep up with our pace. If we’re generating code faster, how do we ensure we’re not just shipping bugs faster? Our testing infrastructure wasn’t built for AI-speed development.”

CEO’s Pressure: “Our competitors are moving at AI speed. We need to match that or we’ll fall behind. But I also don’t want to sacrifice the quality that’s been our competitive advantage.”

Customer Reality: “They don’t care how it’s built. They care that it works reliably and solves their problems.”

In our advisory session, I could see the tension in the room. Everyone wanted to move faster. No one wanted to break things. The decision felt urgent and overwhelming at the same time.

“This is a classic messy middle problem,” I told the team. “You can’t just adopt everything because competitors are. You can’t ignore it because there are risks. You need conviction about what’s right for your situation.”

What We Changed: Decision-First Spikes

In our next coaching session, Sarah and I redesigned her approach. Before her next exploration, we would frame the decision first.

I walked her through three questions:

1. What decision does this inform?

Sarah’s first instinct: “Should we adopt AI coding tools?”

“Too vague,” I pushed back. “What are the actual choices on the table?”

After some discussion, Sarah framed it more precisely:

Decision: How aggressively should we adopt AI coding tools in our development workflow: conservative (limited pilot), moderate (team-by-team adoption with guard rails), or aggressive (company-wide standard with velocity targets)?

Now we had something specific to build conviction around.

2. What would need to be true for each path?

We mapped out what Sarah would need to believe for each approach:

For Conservative Approach (limited pilot):

Current velocity is sufficient for competitive positioning

Quality and security risks outweigh speed benefits in the short term

We can learn from other companies’ mistakes before scaling

Our technical debt problem won’t get worse while we wait

For Moderate Approach (team-by-team with guard rails):

Some domains are safer for AI acceleration than others (new features vs. core infrastructure)

We can establish and enforce guard rails as we scale

Mixed adoption won’t create code quality inconsistency

We can dedicate AI velocity gains to EITHER new features OR tech debt systematically

For Aggressive Approach (company-wide with velocity targets):

Speed to market is an existential competitive advantage right now

We can manage quality/security with improved testing infrastructure

Team is ready for rapid workflow change

We can use velocity gains for BOTH new features AND tech debt

“This is good,” Sarah said, “but how do I figure out which approach to recommend?”

3. What would make you change your mind?

We identified the evidence that would push the decision one way or another:

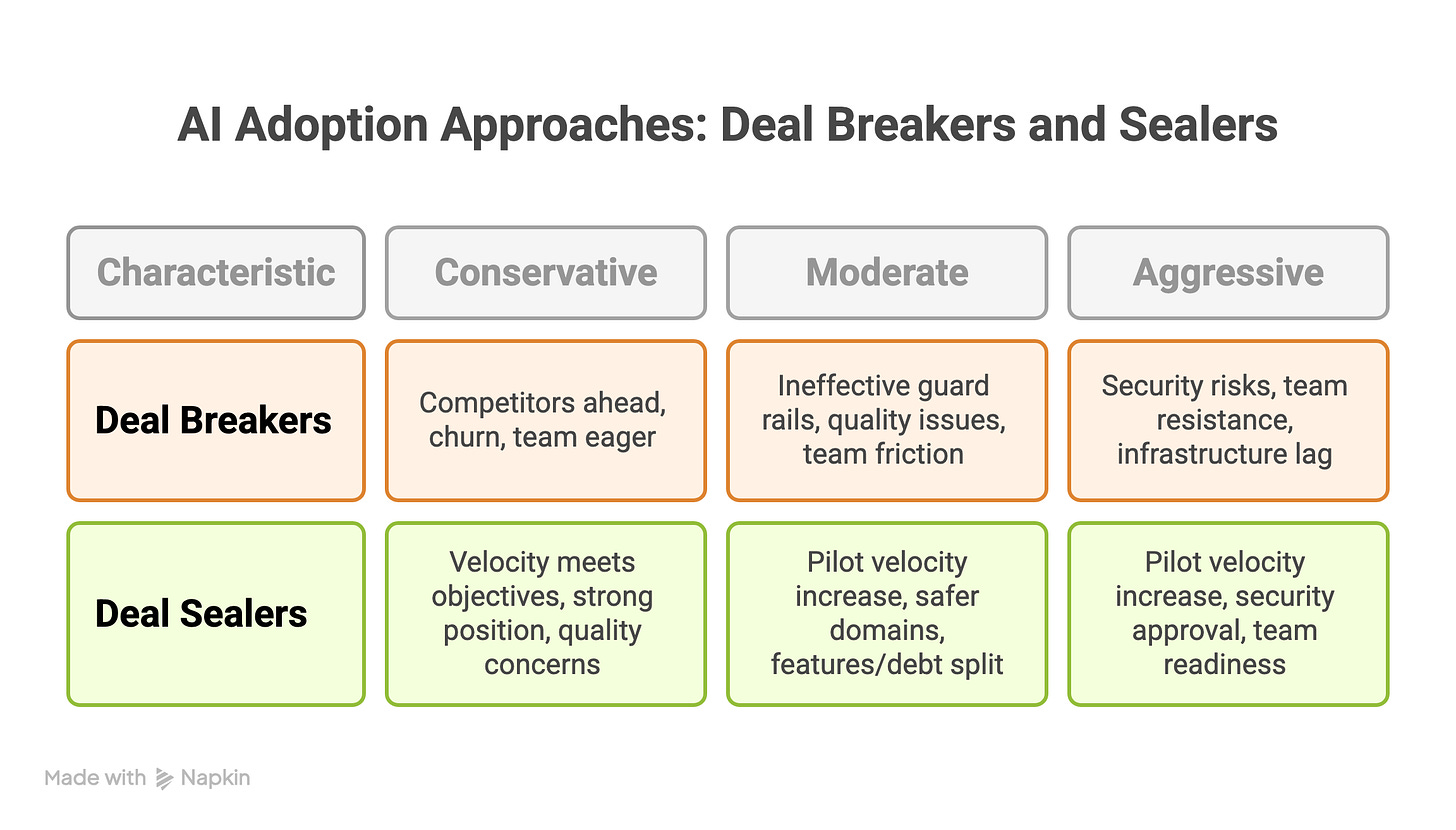

Conservative Approach:

Deal Breakers:

Competitors demonstrably pulling 2+ quarters ahead on features

Customer churn attributable to slow feature delivery

Team survey shows >70% ready and eager for AI tools

Deal Sealers:

Current velocity meets business objectives

Competitive positioning remains strong

Quality or security concerns emerge in other companies’ AI adoption

Team prefers to learn from others before committing

Moderate Approach:

Deal Breakers:

Cannot establish effective guard rails that work consistently

Quality inconsistency across AI-enabled vs. non-enabled teams

Split adoption creates significant team friction or confusion

Deal Sealers:

Pilot shows 30-40% velocity increase with quality maintained

Some domains prove safer for AI acceleration than others

60/40 features/debt split achievable with structured discipline

Team readiness mixed but workable (50-70% positive)

Aggressive Approach:

Deal Breakers:

Security audit reveals unacceptable risks in AI-generated code

More than 30% of team strongly resists workflow change

Testing infrastructure cannot keep pace with code velocity

Legal identifies licensing/IP concerns we cannot mitigate

Deal Sealers:

Pilot shows 40%+ velocity increase without quality degradation

Security approves with manageable guard rails

Team readiness above 80%

60/40 features/debt split works naturally without heavy oversight

Competitive pressure demands immediate response

“Now this,” Sarah said, “this I can actually work with. Each path has clear conditions.”

The Structure That Persisted

After Sarah ran her research spike with AI (it took about 20 minutes), she didn't just document findings. She captured the decision scaffold:

Decision Being Informed:

How aggressively to adopt AI coding tools: conservative (limited pilot), moderate (team-by-team), or aggressive (company-wide)

Evidence Gathered:

3 similar-stage companies using AI tools: 2 report 35-50% velocity increase, 1 had quality issues and pulled back

Security concerns manageable with proper code review + automated scanning

Testing infrastructure needs upgrade regardless (already a Q3 initiative)

Team survey: 65% excited, 25% cautiously optimistic, 10% skeptical

Current tech debt backlog: estimated 8 engineer-months of work

Legal: licensing concerns exist but manageable with tool selection

Security scanning cost: $50K/year (already budgeted)

What This Evidence Tells Us:

Team resistance lower than feared (10% vs. 30% threshold) → doesn’t rule out any approach

Velocity gains are real but quality risks exist → moderate makes sense as starting point

Security/legal concerns are manageable → doesn’t block any path

Testing infrastructure needs work but timeline aligns → not a blocker

Still Need to Validate:

Can we maintain quality with higher velocity? (need pilot data)

Can we establish discipline to split gains 60/40 features/debt? (need commitment from leadership)

Will our current testing infrastructure support moderate adoption? (need technical assessment)

Recommendation:

Start with moderate approach:

60-day pilot with 2 teams (1 on new features, 1 on tech debt refactoring)

Measure velocity, quality, team satisfaction

Test whether we can maintain features vs. debt allocation discipline

Decision point at day 60: stay moderate or move to aggressive based on pilot results

Next Steps When Revisited: Not “what’s changed in AI tools?” but:

Did pilot validate velocity gains without quality loss?

Could we maintain features/debt discipline with clear guidelines?

Have competitive dynamics changed our urgency?

Is testing infrastructure upgrade complete?

Has team sentiment shifted after seeing pilot results?

If you’re facing similar challenges—research without conviction, stakeholder ambiguity, or decisions that keep resurfacing—this framework can help.

The Advisory Session That Changed Everything

When the 60-day pilot ended, we reconvened the executive team for Sarah's recommendation.

Sarah opened: "Thank you for your time. A few months ago, you asked about our AI coding strategy. Today I'm recommending a path forward based on data from our pilot."

Her presentation was crisp:

What We Tested:

2 teams, 60 days

Team A: Used AI tools for new feature development

Team B: Used AI tools for tech debt reduction

Results:

Team A velocity: +42% (shipped 2.3x more feature work)

Team B velocity: +38% (cleared 2.1x more tech debt items)

Bug escape rate: No increase (actually slight decrease)

Security incidents: Zero (with new scanning in place)

Team satisfaction: 8.2/10 (up from baseline 7.1/10)

Code review time: +15% (expected, manageable)

What We Learned:

The velocity gains are real and sustainable

Quality doesn’t suffer with proper guard rails

Teams can maintain discipline on features vs. debt allocation

Testing infrastructure needs the planned upgrade (doesn’t block this)

Recommendation: Moderate Approach with Q3 Expansion

Now (Q2):

Expand to 4 more teams (total 6 of 12 teams)

Maintain 60/40 split: features/tech debt

Implement guard rails company-wide

Q3 Decision Point:

If 6-team results hold → expand to aggressive (all 12 teams)

If quality degrades → stay at moderate

Reassess features/debt split based on Q2 outcomes

What Would Change This Recommendation:

Quality metrics decline in expanded pilot

Testing infrastructure upgrade delays

Security incident related to AI-generated code

Team resistance increases above 20%

The CEO leaned forward: “How confident are you in this?”

Sarah didn’t hesitate: “Highly confident in the moderate approach. The pilot data is strong. We’ll know in Q3 whether aggressive makes sense based on what we learn from 6 teams.”

The CFO asked: “What’s the ROI?”

“At moderate adoption,” Sarah answered, “we’re looking at 4-6 additional engineer-months of capacity per quarter. We can use that to ship 2-3 more features AND clear roughly 40% of our tech debt backlog over the next year. At aggressive adoption, those numbers roughly double.”

The decision took five minutes. Approved unanimously.

After the meeting, the CEO pulled me aside: “Sarah’s gotten really decisive. What changed?”

The Results Three Months Later

In our Q3 advisory check-in, Sarah shared the outcomes:

Moderate Adoption (6 of 12 teams):

Velocity gains holding at 40% average

Quality metrics stable

Tech debt backlog reduced 35%

3 features shipped that wouldn’t have fit in timeline otherwise

Team satisfaction remained high

Organizational Learning. Along the way, the company:

Established AI coding standards and guard rails

Created “velocity allocation” framework (features vs. debt)

Upgraded testing infrastructure (already needed, now critical)

Developed training program for remaining teams

Built confidence in using AI as an accelerator, not a crutch

Most importantly: Sarah’s credibility as a product leader grew significantly.

The CEO’s feedback in her review: “Sarah has developed the ability to navigate complex, high-stakes decisions with clarity and conviction. She doesn’t just present options—she builds cases backed by data and clear decision frameworks.”

Why This Approach Works

Sarah’s transformation wasn’t about researching faster. It was about building conviction systematically.

Her old approach:

Gather information

Document findings

Hope insights lead to decisions

Her new approach:

Frame the decision first

Identify what needs to be true

Gather evidence to validate or invalidate beliefs

Build conviction that persists over time

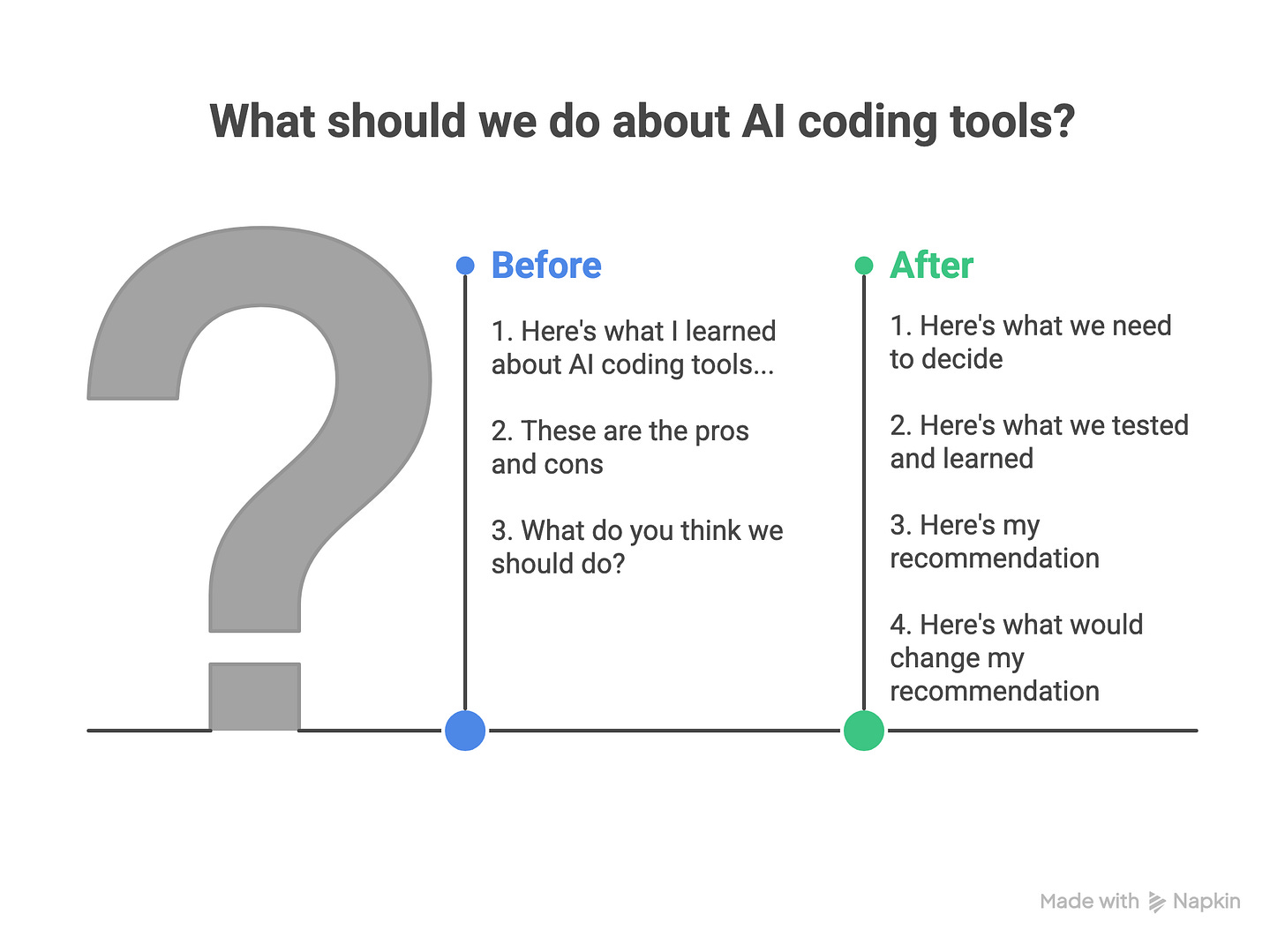

The difference for her stakeholders was dramatic.

Before: “Here’s what I learned about AI coding tools. There are pros and cons. What do you think we should do?”

After: “Here’s what we need to decide, here’s what we tested, here’s what we learned, here’s my recommendation, and here’s what would make me change my mind.”

The first creates ambiguity. The second creates confidence.

When to Use Decision-First Spikes

Through our coaching work, Sarah has learned to use this approach selectively.

She uses decision-first spikes when:

Multiple stakeholders need to align

The decision involves significant investment (time, money, or political capital)

The topic will resurface in 3-6 months

She needs conviction, not just information

There are multiple valid paths depending on what she believes

She skips it when:

She’s satisfying curiosity with no decision pending

The decision is clearly hers alone and low-stakes

She needs landscape understanding before she can even frame a decision

The exploration is for learning, not deciding

The Real Leverage of AI

In our coaching sessions, Sarah and I often discuss what AI actually enables.

“AI doesn’t just help me explore faster,” Sarah told me recently. “It helps me think more clearly about what I’m trying to decide.”

She’s right. Here’s why:

The discipline of framing “what would need to be true” before you start forces clarity. You can’t hide behind vague goals or open-ended exploration.

The speed of getting answers lets you test multiple decision frames quickly. If your first frame doesn’t yield useful validation criteria, you can reframe and try again in minutes, not days.

The ability to have AI stress-test your logic builds conviction faster. Sarah now regularly asks: “What am I missing in this decision framework?” or “What would a skeptic challenge in my assumptions?” She gets thoughtful pushback in real time, which strengthens her thinking before she enters a stakeholder conversation.

This is the real power of AI-augmented strategic thinking. It’s not just execution velocity. It’s clarity velocity.

Try This

If you use AI for strategic exploration, here’s what I recommend:

Next time you’re about to ask AI a research question, pause.

Ask yourself: “What decision am I actually trying to inform?”

Then ask: “What would need to be true to say yes? What would make me say no?”

Then ask AI to help you validate or invalidate those beliefs.

See if it changes what you learn and how you use it.

I suspect you’ll find what Sarah found: you’re not just exploring faster. You’re deciding smarter.

And in the messy middle, that’s what actually matters.

I work with leaders operating in resource-constrained environments in both advisory and coaching capacities. If you want to build this capability in yourself or your team, let's talk.

What decisions are you circling without clear conviction? What would need to be true to move forward? I’d love to hear what you’re working through.